PARTICIPATORY EVALUATION: DESIGN, BRICOLAGE AND PAYING ATTENTION TO RIGOUR

In the IDS opinions blog, Marina Apgar reflects on her learning and inspiration from the DeGEval Spring Conference 2023, notably the PIALA workshop organised by Collaborative Impact.

I have been to my fair share of evaluation conferences. Each has something to offer. I usually leave feeling pleased that at the least I’ve added a few potential new tools to my toolbox, but when they are really good, they leave me pondering new questions and with that buzz you get from geeking out with colleagues who care as much as you do for methodological meanderings.

The spring conference of the DeGEval Working Group for Development Cooperation and Humanitarian Aid delivered on both of these expectations, but it did something else as well: it left me feeling more positive than I often do about the possibility of practising what we preach – of learning from the past in order to do better in the future.

The theme of the conference was Participatory Evaluation. Over a day and a half of plenaries, world cafes, open space, workshops and a final fishbowl, about 60 participants from civil society, consultancies and donors came together in the beautiful Stuttgart Palace at the University of Hohenheim, hosted by FAKT Consult, to explore what participatory evaluation is and how to build rigour into our practice. I have been discussing this topic with DEval and their partners Evalparticipativa for some years, so this was an opportunity to learn about the German evaluation scene, as part of our ongoing engagement with European partners and funders linked with our work at the Centre for Development Impact.

What follows is some of the learning and inspiration I took with me into the summer heat.

The practice of participatory monitoring and evaluation is enduring and evolving. Enduring because even after (quite lengthy) periods in evaluation when there was little room for participation, some committed practitioners have kept pockets of practice alive, often against the odds. And rather than simply a story of survival, what I heard from participants was a story of continued innovation, using old tools in new ways. One example is the Method for Impact Assessment of Projects and Programmes (MAPP), developed by Susanne Neubert, applied in different evaluations by Bernward Causemann and FAKT Consult colleagues, and presented by the brilliant Regine Parkes. Its associated toolbox of ‘tiny tools’ builds on Participatory Rural Appraisal (PRA) tools and echoes thinking around ‘rapid’ methods that produce timely, relevant and actionable knowledge. I was particularly taken by the deep thinking behind how to sequence and combine different methods with careful attention to overall participatory design.

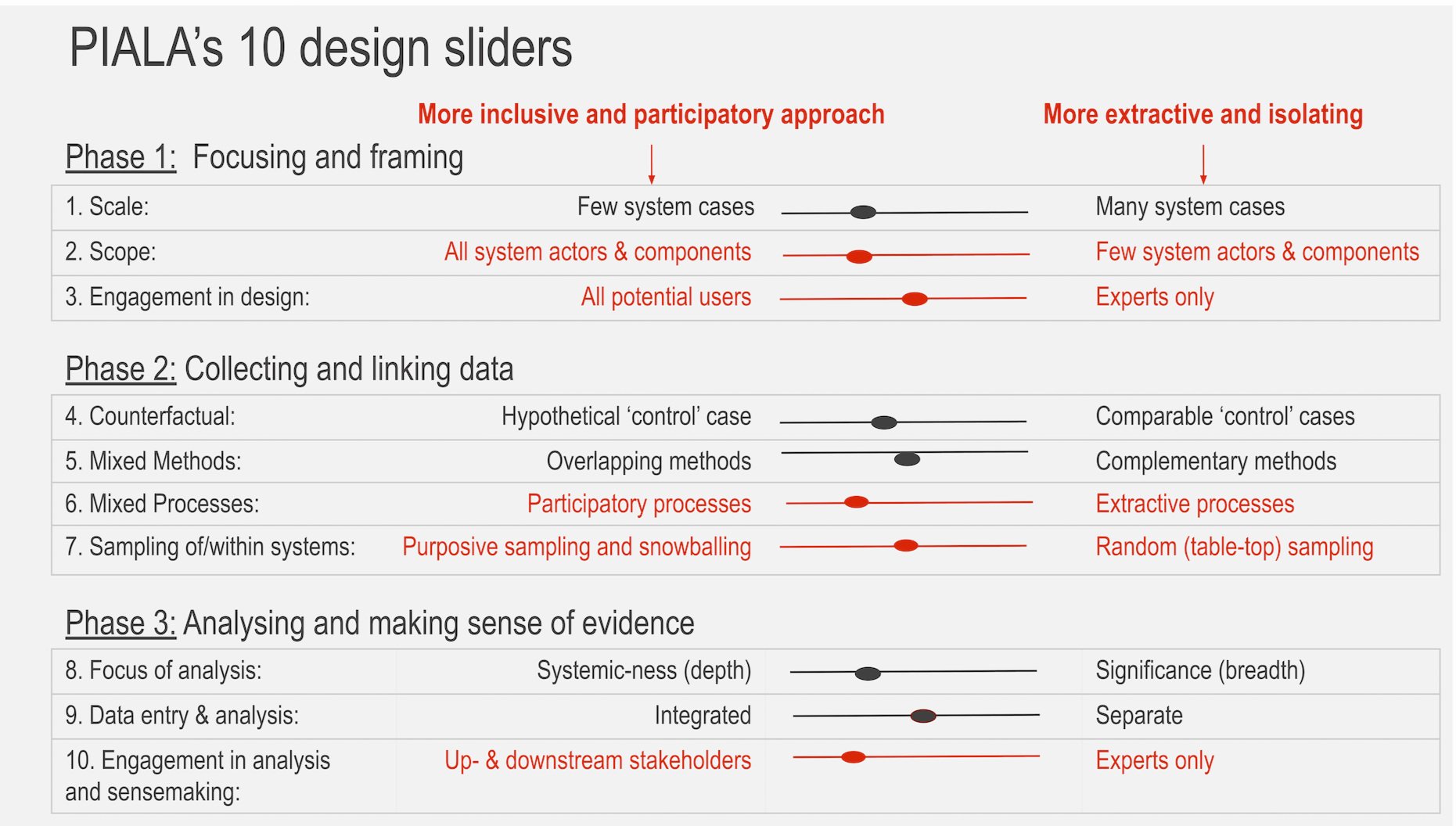

In this moment of attention to decolonisation and movements to localise M&E, the discussions suggested that we can make good on our past learning, to avoid the known pitfalls of homogenisation and co-option by making sure we move beyond a focus on the tools alone to embrace the participatory design process and all the messiness that comes with it. In a workshop on the PIALA approach to impact evaluation by Adinda van Hemelrijck and Eva Wuyts of Collaborative Impact, this was brought to life through group discussions on how to keep a decision space for participation alive throughout the evaluation process.

The point I often find myself repeating is that participation is not something that happens in a ‘moment’ but rather is an aspiration to hold on to throughout. The ten ‘design sliders’ of PIALA (shown in the image) are a smart way to bring this deliberative process to life. We made this practical by exploring how these sliders helped a specific case study evaluation team to make choices about appropriate levels of participation of different stakeholders throughout.

While the extremes of ‘participatory’ on the left and ‘extractive’ on the right at first feel uncomfortable, and some of us are naturally drawn to the left (making it seem a normative choice), the exercise helped us grapple with one of the hardest questions of all in participatory evaluation – how much is ‘enough’ at any point in time and for which stakeholder(s)? In the pragmatic science of evaluation, the point is not to be idealistic, but to make thoughtful choices. And I was excited to reconnect with how PIALA proposes to balance inclusiveness, rigour and feasibility, illustrating the tensions or trade-offs we face.

What was perhaps the most exciting aspect of these conversations is that both the PIALA offerings on decision making and the reflections on using multiple PRA tools within an intentionally designed evaluation process such as MAPP illustrate how the practice of methodological bricolage is necessary to ensure quality and rigour in participatory evaluation. In my keynote presentation I briefly mentioned recent thinking about methodological bricolage and the nascent inclusive rigour framework as tools to help us tell the true stories of our practice.

This seemed to strike a chord with the audience, leading to an additional open space session to reflect more on these ideas. Some francophone participants challenged me on the term bricolage, suggesting that for them it has connotations of messiness and ‘anything goes’, which is precisely what we do not want. Amongst the enthusiasm there was some healthy scepticism about what using the language of bricolage might enable. One of the hardest questions I was asked was about efficiency and feasibility – is there a risk that bricolage could lead to unnecessarily complex evaluations that hit up against the real-life constraints of a pragmatic practice? My response is that if we get better at reflecting on how to be intentional about methodological bricolage in order to enhance rigour, we may in fact be able to reduce inefficiency by bricolaging in ways that matter.

In our final fishbowl, useful reflections were shared on where we need to keep the conversation going. I admit to feeling frustrated that discussions about participatory evaluation still tend to be disconnected from conversations about causal analysis. The term ‘causal’ remains unhelpfully colonised by counterfactual logics, and when pushed on ‘rigour’, participatory evaluators often respond with ‘well you can’t use these participatory tools on their own, you need to mix them with quantitative tools’. The sticking point seems to be the old yet enduring assumption that qualitative data about lived experience, often in the form of stories, is not suitable to support causal inference. What would be exciting to dive into in our next conversation is how different causal frameworks, most famously elaborated in the so-called Stern review (a watershed moment for broadening evaluation design in the UK aid context), can be applied to, supported by and enhanced through the use of participatory evaluation approaches and methods.

The point is that no single method (quant, qual or participatory) can, on its own, generate robust-enough evidence for plausible causal inference in complex environments. This is one of the angles of the nascent causal pathways initiative, taking advantage of the momentum in US philanthropy around equity-oriented evaluation, while embracing complexity and uncertainty in achieving systems change. A second area for follow-up was a vibrant topic of discussion throughout various sessions, and one that some consider the holy grail of participatory research – how do we move our practice to meaningful participatory analysis and sensemaking? In the context of large evaluations with large amounts of qualitative and participatory data, the question of how much participation is possible and sufficient in analysis remains a challenging one to answer.

Read Adinda’s response on LinkedIn…